Open Source HPC Benchmarking

Andy Turner, EPCC

22 May 2019

a.turner@epcc.ed.ac.uk

Slide content is available under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

This means you are free to copy and redistribute the material and adapt and build on the material under the following terms: You must give appropriate credit, provide a link to the license and indicate if changes were made. If you adapt or build on the material you must distribute your work under the same license as the original.

Note that this presentation contains images owned by others. Please seek their permission before reusing these images.

Built using reveal.js

reveal.js is available under the MIT licence

Overview

- Introduction

- Open source benchmarking

- Benchmarking results

- Live demo

- Next steps

Introduction

Why benchmarking now?

- Lots of different HPC systems available to UK researchers

  - ARCHER: UK National Supercomputing Service

  - DiRAC: Astronomy and Particle Physics National HPC Service

  - National Tier2 HPC Services

  - PRACE: pan-European HPC facilities

  - Commercial cloud providers

- A diversity of architectures available (or coming soon):

  - Intel Xeon CPU

  - NVidia GPU

  - Arm64 CPU

  - AMD EPYC CPU

  - …(variety of interconnects and I/O systems)

Audience: users and service personnel

- Give researchers the information they need to choose the right services for their research

- Allow service staff to understand their service performance and help plan procurements

Benchmarks should aim to test the full software package with realistic use cases

Initial approach

- Use software in the same way as a researcher would:

  - Use already installed versions if possible

  - Compile sensibly for performance but do not extensively optimise

  - May use different versions of software on different platforms (but try to use newest version available)

- Additional synthetic benchmarks to test I/O performance

Open source benchmarking

https://xkcd.com/225/


What is open source benchmarking?

- Full output data from benchmark runs are freely available

- Full information on compilation (if performed) is freely available

- Full information on how benchmarks are run is freely available

- Input data for benchmarks are freely available

- Source for all analysis programs is freely available
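The five criteria above can be treated as a checklist for each published benchmark. The following is a minimal sketch only: the `BenchmarkRecord` class, its field names and the example paths are hypothetical illustrations, not part of any existing benchmark repository.

```python
from dataclasses import dataclass, fields


@dataclass
class BenchmarkRecord:
    """Hypothetical record of where each published artefact for one benchmark lives."""
    output_data: str = ""          # full output from the benchmark runs
    compilation_details: str = ""  # how the software was built (or a note that it was not compiled)
    run_details: str = ""          # job submission scripts and run instructions
    input_data: str = ""           # input datasets
    analysis_source: str = ""      # source for the analysis programs


def missing_artefacts(record):
    """Return the names of the checklist items that have not been filled in."""
    return [f.name for f in fields(record) if not getattr(record, f.name)]


# Example paths are invented for illustration.
record = BenchmarkRecord(
    output_data="results/",
    compilation_details="build-notes.md",
    run_details="job-scripts/",
    input_data="inputs/",
)
print(missing_artefacts(record))
```

A record with an empty list of missing artefacts meets all five criteria; here the analysis source has not yet been published.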

Problems with benchmarking studies

Benchmarking is about quantitative comparison

Most benchmarking studies do not lend themselves to quantitative comparison

- Do not publish raw results, only processed data

- Do not describe how data was processed in sufficient detail

- Do not provide input datasets and job submission scripts

- Do not provide details of how the software was compiled

Benefits of open source approach

- Allows proper comparison with other studies

- Data can be reused (in different ways) by other people

- Easy to share and collaborate with others

- Verification and checking - people can check your approach and analysis

Results

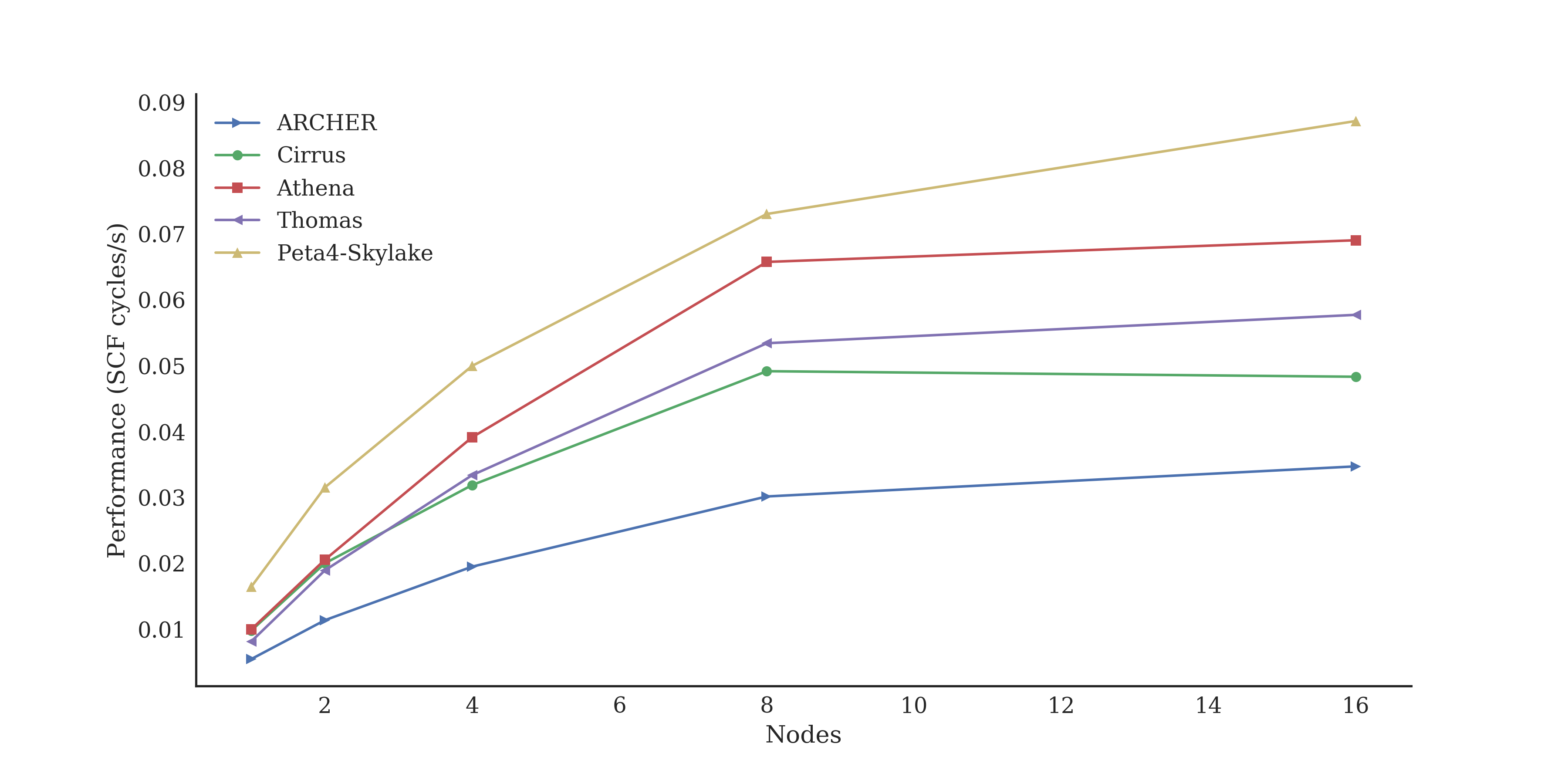

Multinode performance: CASTEP

Single node performance: GROMACS

| System | Architecture | Performance (ns/day) | Performance relative to ARCHER |
|---|---|---|---|
| ARCHER | 2x Intel Xeon E5-2697v2 (12 core) | 1.216 | 1.000 |
| Cirrus | 2x Intel Xeon E5-2695v4 (18 core) | 1.918 | 1.577 |
| Tesseract | 2x Intel Xeon Silver 4116 (12 core) | 1.326 | 1.090 |
| Peta4-Skylake | 2x Intel Xeon Gold 6142 (16 core) | 2.503 | 2.058 |
| Isambard | 2x Arm Cavium ThunderX2 (32 core) | 1.250 | 1.028 |
| Wilkes2-GPU | 4x NVidia V100 (PCIe) | 2.963 | 2.437 |
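The final column of the table is each system's ns/day figure divided by the ARCHER figure. A minimal sketch of that calculation, using the values from the table (the function name `relative_performance` is an illustrative choice, not taken from the benchmark repository):

```python
# Single-node GROMACS performance in ns/day, copied from the table above.
ns_per_day = {
    "ARCHER": 1.216,
    "Cirrus": 1.918,
    "Tesseract": 1.326,
    "Peta4-Skylake": 2.503,
    "Isambard": 1.250,
    "Wilkes2-GPU": 2.963,
}


def relative_performance(results, baseline="ARCHER"):
    """Return each system's performance divided by the baseline system's."""
    base = results[baseline]
    return {system: value / base for system, value in results.items()}


ratios = relative_performance(ns_per_day)
for system, ratio in ratios.items():
    print(f"{system:15s} {ratio:.3f}")
```

Because the raw ns/day values are published, anyone can recompute the comparison against a different baseline system by changing the `baseline` argument.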

Next steps

- Write a report on single node performance comparisons

- Run multi-node Arm processor tests as soon as systems are available

- Run benchmarks on commercial cloud offerings

- Include ML/DL benchmarks in set

- Carry out performance analysis of existing benchmarks and add the results to the repository

Information on facilities and how to access them

Open Source, community-developed resource

Benchmark Performance Reports

Performance of HPC Benchmarks across UK National HPC services

A Turner, EPCC, The University of Edinburgh

J Salmond, University of Cambridge

https://edin.ac/2Q1m7ot

Single Node Performance of HPC Benchmarks across UK National HPC services

A Turner, EPCC, The University of Edinburgh

https://edin.ac/2w8DCuW